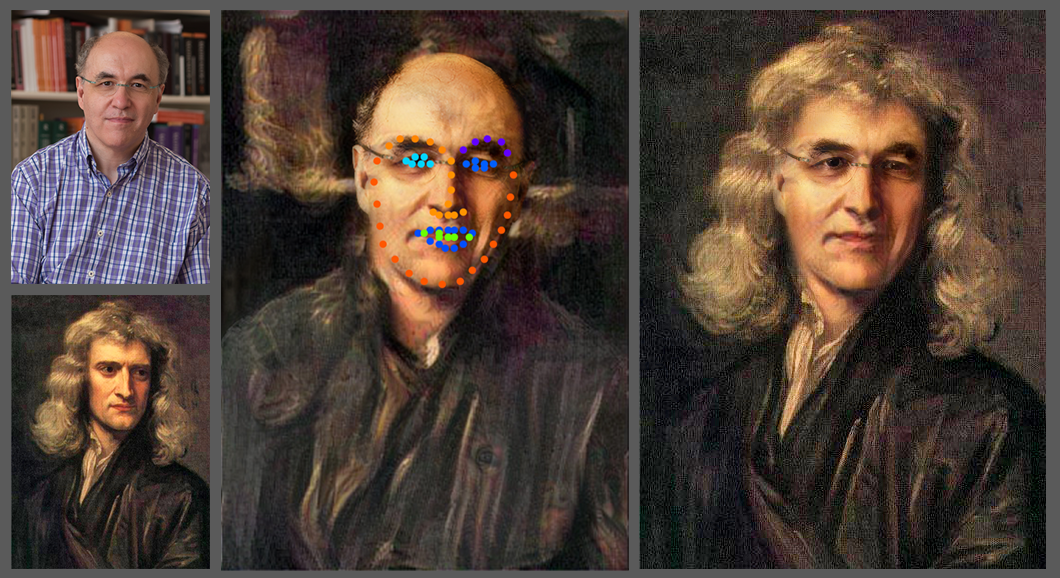

Filters can be used to swap people's faces, but deepfakes take it a step further by making these swaps, whether photo or audio, realistic. These deepfakes are entering the mainstream media quickly as AI becomes more popular.

A deepfake is a video, audio clip, or photo that looks or sounds real but has been manipulated by AI, typically using deep learning models trained on a large library of data. Deepfakes have entered the mainstream media in recent years and have improved immensely since their origins. While deepfakes can be fun, they can also be harmful in many ways.

In her article, “The Distinct Wrong of Deepfakes,” Adrienne de Ruiter writes, “The ability to produce realistic looking and sounding video or audio files of people doing or saying things they did not do or say brings with it unprecedented opportunities for deception.”

Deepfakes can be dangerous and are already degrading the trustworthiness of content online. News can be faked easily by swapping faces and synthesizing voices. Many deepfakes can still be detected, even by the untrained eye, through small errors:

- Unnatural eye movements, facial expressions, and skin tone

- Lack of emotion

- Teeth that don’t look real

These errors are easier to see when you slow down the video or observe it closely, but your best tool is a reverse image search to try to find the original content. You can do this by going to Google, clicking the camera button in the search bar, and dragging the image into the box.

This means that our best defense against this technology is to check sources diligently, which lets us catch fakes without having to judge potentially convincing content by eye. This defense should work against all deepfakes, including audio deepfakes, which are often used in over-the-phone scams but can also accompany video deepfakes.

There is no limit to who a deepfake can affect. High school students are probably better equipped to deal with this threat, as they have more experience with the internet. However, if they are careless and don’t check sources, they can still be fooled.

The scams mentioned often affect the older generation because they do not know that deepfakes exist or they underestimate their capabilities. The older generation is especially susceptible to audio deepfake scams that impersonate trusted individuals and create an urgent situation that clouds the victim’s judgment. These scams can appear in the form of a relative urgently asking for a transfer of money, a boss asking for money, or a company requiring that a bill be paid immediately.

Few laws currently address these issues, but California is ahead of most states with Assembly Bill 730, which targets deepfakes of candidates for office within 60 days of an election. This helps protect the fairness of elections, as deepfakes could be used to damage a candidate’s reputation without cause.

Assembly Bill 602 is another California bill. It gives the victim of a non-consensual explicit deepfake a private right of action, including the ability to receive payment for damages. This means that someone whose likeness was used without consent in an explicit video or image can take legal action against the creator in California, as these videos can damage a person’s image.

Federal legislation does exist but is currently not very effective. It requires a watermark on AI-generated content, and such watermarks can be fairly easy to remove or forge. Researchers at the University of Maryland were able to defeat current watermarking methods and even add watermarks to images not generated by AI.

Overall, we need to be vigilant about checking sources and skeptical of the media we find online, as we are entering an exciting and terrifying new age of great creativity and equally great misinformation.
